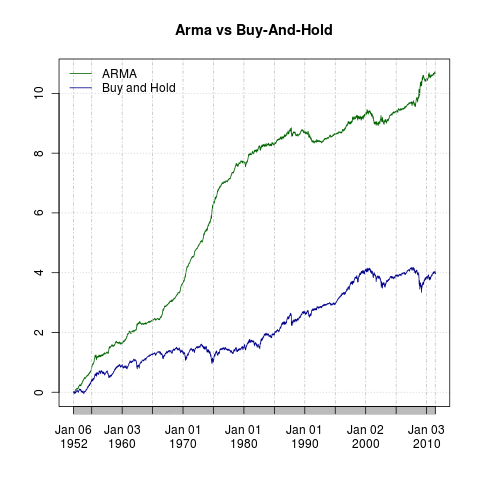

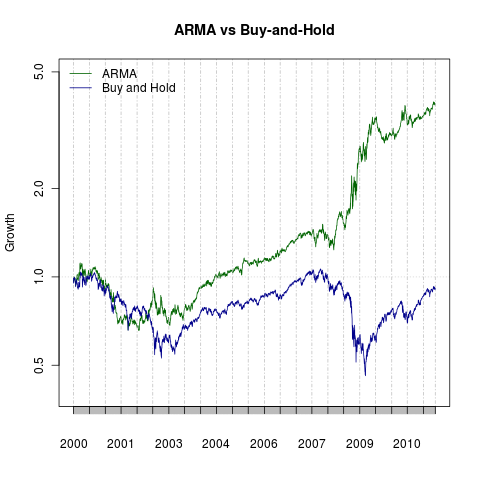

The system described in the earlier series on ARMA trading was in fact an “extreme” version of the more common, orthodox approach prevailing in the literature. Recently I tried using R to reproduce the results of a particular paper, and that led to a lot of new developments …

How is ARMA trading typically simulated? The data is split into two sets. The first set is used for model estimation (the in-sample testing). Once the model parameters are determined, the model’s performance is evaluated on the second set (the out-of-sample forecasting). The first set is usually a few times larger than the second and spans four or more years of data (1000+ trading days).

I wanted to be able to repeat the first step once in a while (weekly, monthly, etc.) and to use the resulting parameters for forecasts until the next calibration. Now it’s easier to see why I classified my earlier approach as “extreme”: it re-estimates the model on a daily basis. In any case, I wanted to build a framework to test the more orthodox approach.

To test such an approach, I needed to perform a “rolling” forecast (have mercy if that’s not the right term). Let’s assume we use weekly model calibration. Each Friday (or whatever the last day of the week is) we find the best model according to some criterion. At this point we can forecast one day ahead, based entirely on previous data. Once the data for Monday arrives, we can forecast Tuesday, again based entirely on previous data but without re-estimating the parameters, and so on until the next calibration.
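To make the idea concrete, here is a minimal sketch of such a rolling forecast in base R. It is only an illustration under loud assumptions: the returns are simulated, a plain AR(1) stands in for the ARMA models (so the one-step forecast can be written by hand), and names such as `nTrain` and `refitEvery` are made up for the example.

```r
# Rolling one-step-ahead forecasts with periodic recalibration (base R only).
# Assumptions: simulated returns and an AR(1) model replace real data and ARMA.
set.seed(42)
rets <- as.numeric(arima.sim(model = list(ar = 0.3), n = 600, sd = 0.01))

nTrain     <- 500                      # size of the initial in-sample window
refitEvery <- 5                        # recalibrate every 5 observations ("weekly")
nTest      <- length(rets) - nTrain    # number of out-of-sample days

forecasts <- numeric(nTest)
refits    <- 0

for (i in seq_len(nTest)) {
  last <- nTrain + i - 1               # last observation known when forecasting day last + 1
  if ((i - 1) %% refitEvery == 0) {
    # Calibration day: re-estimate the parameters on all data seen so far
    fit <- arima(rets[1:last], order = c(1, 0, 0), include.mean = TRUE)
    phi <- unname(coef(fit)["ar1"])
    mu  <- unname(coef(fit)["intercept"])
    refits <- refits + 1
  }
  # Between calibrations the parameters stay fixed; only the data advances
  forecasts[i] <- mu + phi * (rets[last] - mu)
}

realized <- rets[(nTrain + 1):length(rets)]
position <- ifelse(forecasts < 0, -1, 1)            # long or short by forecast sign
hitRate  <- mean(sign(forecasts) == sign(realized)) # share of correctly called days
```

Setting `refitEvery` to 1 gives the daily re-estimation of my earlier “extreme” approach, while a value of `nTest` or more gives the single calibration of the orthodox split.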

My problem was that the package I was using, fGarch, doesn’t support rolling forecasts. So before attempting to implement this functionality myself, I decided to look around for other packages (thank god I didn’t jump straight into coding).

At first, my search led me to the forecast package. I was encouraged: it has exactly the forecast function I needed (in fact, it helped me figure out exactly what I needed ;)). The only problem: it supports only mean models such as ARFIMA, with no GARCH.

Next I found the gem: the rugarch package. Not only does it implement a few different GARCH models, it also supports ARFIMA mean models! I found the documentation and examples quite easy to follow too, not to mention that there is an additional introduction. All in all, a superb job!

Needless to say, this finding left me feeling like a fat kid in a candy store (R is simply amazing in this regard!). Most likely you will be hearing about new tests soon; meanwhile, let’s finish the post with a short illustration of the rugarch package (a single in-sample model fit with an out-of-sample forecast):

library(quantmod)

library(rugarch)

getSymbols("SPY", from="1900-01-01")

spyRets = na.trim( ROC( Cl( SPY ) ) )

# Train over 2000-2004, forecast 2005

ss = spyRets["2000/2005"]

outOfSample = NROW(ss["2005"])

spec = ugarchspec(

variance.model=list(garchOrder=c(1,1)),

mean.model=list(armaOrder=c(4,5), include.mean=TRUE),

distribution.model="sged")

fit = ugarchfit(spec=spec, data=ss, out.sample=outOfSample)

fore = ugarchforecast(fit, n.ahead=1, n.roll=outOfSample)

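# Build some sort of indicator based on the forecasts.

# n.roll=outOfSample yields one forecast more than there are days in 2005, so head(..., -1) drops the last one.

# Note: as.array() on a forecast object comes from older rugarch releases; newer ones appear to expose the forecast means via fitted(fore).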

ind = xts(head(as.array(fore)[,2,],-1), order.by=index(ss["2005"]))

ind = ifelse(ind < 0, -1, 1)

# Compute the performance

mm = merge( ss["2005"], ind, all=F )

tail(cumprod(mm[,1]*mm[,2]+1))

# Output (last line): 2005-12-30 1.129232
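The weekly recalibration described earlier can also be sketched with rugarch’s `ugarchroll`, which refits the model every `refit.every` observations and rolls one-step-ahead forecasts in between. Treat this as a sketch under assumptions, not the setup behind the number above: it uses the `sp500ret` data set bundled with rugarch instead of SPY, a smaller ARMA(1,1) mean model with normal innovations to keep the fits quick, and the argument names of recent rugarch releases.

```r
library(rugarch)

# Assumption: sp500ret (daily S&P 500 returns shipped with rugarch) stands in for SPY
data(sp500ret)
rets <- tail(sp500ret, 1500)

spec <- ugarchspec(
  variance.model = list(model = "sGARCH", garchOrder = c(1, 1)),
  mean.model = list(armaOrder = c(1, 1), include.mean = TRUE),
  distribution.model = "norm")

# Forecast the last 250 days one step ahead, refitting every 5 observations
roll <- ugarchroll(spec, data = rets, n.ahead = 1, forecast.length = 250,
                   refit.every = 5, refit.window = "recursive",
                   calculate.VaR = FALSE)

# One row per forecast day: Mu is the forecast mean, Realized the actual return
preds <- as.data.frame(roll)

# Same sign-based indicator and performance calculation as in the listing above
ind <- ifelse(preds$Mu < 0, -1, 1)
tail(cumprod(ind * preds$Realized + 1))
```

If some of the refits fail to converge, extracting the forecasts will complain; in that case `resume(roll)` with a different solver is probably the first thing to try.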

Hats off to brilliance!